Poll Averages & Forecast update: Presidential Election

The 7 Battlegrounds

If you’re new, welcome. Please read the introduction.

If you’ve been here awhile, this introduction will be brief, and a little refresher never hurts.

Let’s go ahead and establish some basic truths that are still not properly understood in this field:

Polls are not predictions, or forecasts.

Poll averages are not predictions, or forecasts.

Forecasts are forecasts.

That all sounds easy and obvious, but when it comes time to apply those concepts, the “easy and obvious” is forgotten.

All of the field’s foremost experts judge poll accuracy as if they were predictions, and most of them have outright called polls and poll averages “predictions.”

There's no nice way to tell someone they're wrong. But they're wrong.

Polls are estimates of a candidate's base of support; polls are not predictions of a future value. Mistaking an estimate of a present value for a prediction of a future one is such a basic scientific error that it's embarrassing that I have to argue with people about it. Don't get me wrong, I don't mind teaching or explaining it. I am happy to do so; I wrote a book.

I am not saying that this should be easy to understand, either. If it's taking you a minute to wrap your mind around it, ask.

Arguing about this with people who think they know but are easily proven wrong is frustrating.

But arguing with people who think they know and actively misinform people who want to understand is why I'm so unforgiving.

The data given by a poll tells us nothing about how undecideds will decide, or who might change their mind.

Which is to say, when I say “polls aren't forecasts, polls aren't predictions,” it might seem easy and obvious, even pedantic, until you see that even the field's foremost experts don't understand it.

Our brain likes to take mental shortcuts, and insert “close enoughs” where they don’t belong. In the event that those “close enoughs” are bigger than we realize, and accumulate, suddenly we’re left with what appears to be a large “error” that couldn’t possibly have been ours. It must have been the polls.

This kind of sloppy thinking (which many analysts and forecasters hide behind) is one of many statistical literacy topics I hope to help correct: in my book, on Substack, on social media, and maybe even in real life.

Simply put:

Going from poll to poll average to forecast requires many steps. “Close enough” is not even close. If you’re interested in understanding the depth of this process better, along with some other poll- and forecast-related concepts (including some new ones I created just for fun), I’d strongly recommend my book here.

If you’re not interested in the book, you’ll just have to take my word for it. I don’t judge people who view polls and poll averages as forecasts* - but it’s a mistake in thinking that experts have caused and/or reinforced.

*With a very big exception for people who demand their analysis be taken seriously on the topic.

A VERY BRIEF word about polls:

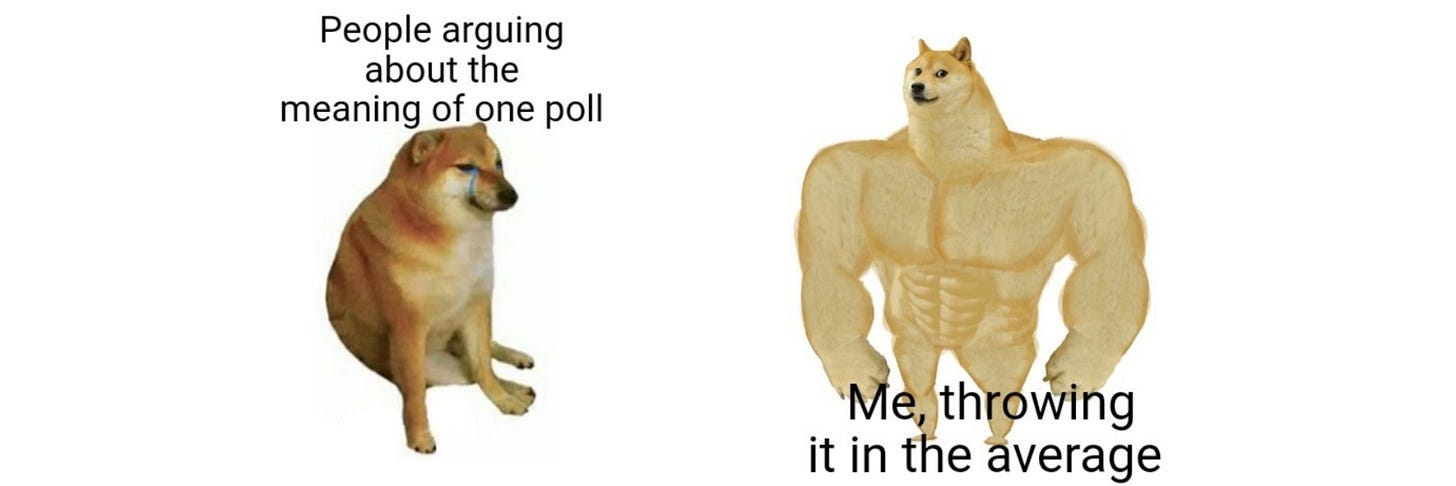

It’s the meme.

The best pollster in the world can only be so accurate. Given the best pollster in the world and 9 average ones, it’s highly unlikely that the best pollster will produce the most accurate polls. It’s just math.
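To see why “it’s just math,” here is a minimal Python simulation sketch. The error sizes are hypothetical assumptions chosen for illustration, not measured values: the best pollster’s error on the margin has a standard deviation of 2.0 points, and each of the nine average pollsters has 2.5 points. Even with that real edge, the best pollster turns out to be the single most accurate one only a little more often than the 1-in-10 you’d get by pure chance.

```python
import random

# Hypothetical assumptions for illustration only (not measured values):
# the "best" pollster's error on the margin has a standard deviation of 2.0 points,
# while each of the nine "average" pollsters has a standard deviation of 2.5 points.
BEST_SD = 2.0
AVERAGE_SD = 2.5
N_AVERAGE = 9


def best_pollster_most_accurate_share(trials: int = 100_000, seed: int = 1) -> float:
    """Fraction of simulated elections in which the best pollster has the smallest absolute error."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        best_error = abs(rng.gauss(0.0, BEST_SD))
        other_errors = [abs(rng.gauss(0.0, AVERAGE_SD)) for _ in range(N_AVERAGE)]
        if best_error < min(other_errors):
            wins += 1
    return wins / trials


if __name__ == "__main__":
    share = best_pollster_most_accurate_share()
    print(f"Best pollster was the most accurate in {share:.1%} of simulated elections")
```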

I don’t care who was most accurate last election, or the last two elections. First of all, the field's current definition of accuracy is internally invalid.

Second, trying to say which pollsters are “most accurate” (by any measure) based on one or two elections is searching for meaning in noise. No one who understands statistics would do it.

In order to have a good idea of who the most accurate pollsters are, you would need AT LEAST 10 elections (that’s 20-40 years, depending on whether we’re counting midterms) AND all of those pollsters would have to use the same methodology every year - which none of them do.
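A rough simulation sketch of why one or two elections is noise. Everything here is a hypothetical assumption: ten pollsters, one truly better (error SD of 2.0 points) than the other nine (2.5 points), independent errors each election, and no methodology changes. Mean absolute error on the margin is used only as a stand-in accuracy metric for the illustration. The sketch counts how often the truly best pollster ranks first after 1, 2, and 10 elections.

```python
import random

# Hypothetical assumptions for illustration only:
# - 10 pollsters; pollster 0 is truly the best (error SD 2.0 points on the margin),
#   the other nine have an error SD of 2.5 points.
# - Errors are independent across elections, and no one changes methodology.
# - "Accuracy" here is mean absolute error on the margin, a stand-in metric only.
POLLSTER_SDS = [2.0] + [2.5] * 9
BEST_INDEX = 0


def best_ranked_first_share(n_elections: int, trials: int = 20_000, seed: int = 7) -> float:
    """Fraction of trials where the truly best pollster has the lowest mean absolute error."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        mean_errors = [
            sum(abs(rng.gauss(0.0, sd)) for _ in range(n_elections)) / n_elections
            for sd in POLLSTER_SDS
        ]
        ranked_first = min(range(len(mean_errors)), key=lambda i: mean_errors[i])
        if ranked_first == BEST_INDEX:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    # 10 elections is about 20 years counting midterms, or 40 years of presidential races only.
    for n in (1, 2, 10):
        share = best_ranked_first_share(n)
        print(f"{n:>2} election(s): best pollster ranked first in {share:.1%} of trials")
```

Under these made-up assumptions, more elections help, but the truly best pollster still stays far from a sure bet to rank first even after 10.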

Use an average of pollsters who use a valid methodology and are transparent with their data.

But WHOSE average?!

Most people I talk to are, understandably, concerned with bad data polluting good data. It could happen. In 2022, it probably did.

Flooding (releasing a high volume of polls to pull an average toward one side) is one of several real threats to poll average accuracy.

It becomes a real threat when some pollsters act with motivations other than accuracy in mind (like getting media attention, praise from their party, or simply trying to make their preferred candidate look stronger in poll averages).

Fortunately, it’s not hard right now to see who these bad actors are, and (unless you think it’s “not sharp” to account for that) you can do what I did and give them very little weight in your averages, or outright exclude them.
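A minimal sketch of how flooding pulls an average, and how down-weighting or excluding a pollster limits it. The polls and the pollster name “FloodCo” are invented for illustration, and averaging per pollster before averaging across pollsters is just one simple weighting choice, not any particular aggregator’s method.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical polls for illustration (not real data): (pollster, Harris %, Trump %).
# "FloodCo" is an invented pollster releasing many polls that lean one way.
POLLS = [
    ("Pollster A", 48.0, 46.0),
    ("Pollster B", 47.0, 47.0),
    ("Pollster C", 49.0, 46.0),
    ("FloodCo", 45.0, 49.0),
    ("FloodCo", 45.0, 50.0),
    ("FloodCo", 44.0, 49.0),
    ("FloodCo", 45.0, 49.0),
]


def poll_weighted_margin(polls):
    """Harris-minus-Trump margin with every poll counted equally."""
    return mean(h - t for _, h, t in polls)


def pollster_weighted_margin(polls, weights=None):
    """Average each pollster's polls first, then take a weighted mean across pollsters.

    `weights` maps pollster name -> weight (default 1.0; 0.0 excludes the pollster).
    """
    weights = weights or {}
    margins_by_pollster = defaultdict(list)
    for name, h, t in polls:
        margins_by_pollster[name].append(h - t)
    total = weight_sum = 0.0
    for name, margins in margins_by_pollster.items():
        w = weights.get(name, 1.0)
        total += w * mean(margins)
        weight_sum += w
    if weight_sum == 0:
        raise ValueError("all pollsters were excluded")
    return total / weight_sum


if __name__ == "__main__":
    print(f"Every poll equal:      {poll_weighted_margin(POLLS):+.2f}")
    print(f"Every pollster equal:  {pollster_weighted_margin(POLLS):+.2f}")
    print(f"FloodCo down-weighted: {pollster_weighted_margin(POLLS, {'FloodCo': 0.25}):+.2f}")
    print(f"FloodCo excluded:      {pollster_weighted_margin(POLLS, {'FloodCo': 0.0}):+.2f}")
```

With these made-up numbers, counting every poll equally puts the margin at about Trump +1.9, averaging per pollster moves it to roughly Harris +0.1, and excluding FloodCo gives about Harris +1.7.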

This is one of many reasons my book introduces a concept that, as far as I know, has never been discussed in the field:

Poll aggregator (or poll average) error.

Poll error, though poorly defined, is often discussed. Poll averages themselves are relatively new, but for whatever reason have avoided scrutiny. Not if I have a say in it :)

A poll average is supposed to give us a simplified but more accurate picture of what “the polls” say. That’s true, and important: individual polls tell us very little, while a poll average can tell us much more.

But there is no objective way to take a poll average.

Which is to say, if one person who takes a poll average says “the polls say this” and someone else, who takes a poll average a different way, says “nuh uh, the polls say this!”

Who is right, and how do you know?

Longer version: book.

Short version: all aggregators have to make choices. Which polls to include, if some polls should count for more than others, how many polls to include in the average, and more. Those choices can be totally arbitrary or based on the best data in the world, but it doesn’t matter: those choices introduce error unrelated to “the polls” themselves.
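A small sketch of how those choices alone change the answer. The five polls are hypothetical, and the window sizes and the 7-day half-life are arbitrary illustrative choices, not anyone’s actual settings.

```python
from statistics import mean

# Hypothetical polls for illustration (not real data): (days_old, Harris %, Trump %).
# The two older polls have more undecideds, so both candidates' numbers are lower.
POLLS = [
    (1, 49.0, 47.0),
    (3, 48.0, 48.0),
    (5, 50.0, 46.0),
    (20, 46.0, 45.0),
    (30, 45.0, 44.0),
]


def recent_average(polls, n):
    """Simple average of the n most recent polls, each weighted equally."""
    recent = sorted(polls)[:n]  # tuples sort by days_old first
    return mean(h for _, h, _ in recent), mean(t for _, _, t in recent)


def recency_weighted_average(polls, half_life_days):
    """Weight each poll by 0.5 ** (days_old / half_life_days), so older polls count less."""
    weights = [0.5 ** (days / half_life_days) for days, _, _ in polls]
    total = sum(weights)
    harris = sum(w * h for w, (_, h, _) in zip(weights, polls)) / total
    trump = sum(w * t for w, (_, _, t) in zip(weights, polls)) / total
    return harris, trump


if __name__ == "__main__":
    for label, (h, t) in [
        ("Last 3 polls", recent_average(POLLS, 3)),
        ("Last 5 polls", recent_average(POLLS, 5)),
        ("7-day half-life", recency_weighted_average(POLLS, 7)),
    ]:
        print(f"{label:<16} Harris {h:.1f}  Trump {t:.1f}  margin {h - t:+.1f}")
```

Same five polls, three defensible choices, three slightly different answers. The 5-poll window also lowers both candidates’ numbers because it reaches back to older, higher-undecided polls.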

While there is no objective way to take a poll average, most reputable averages will be pretty close. And you’d probably be surprised at how little difference there is between the allegedly advanced techniques used by modern analysts and a generic recent average.

For research purposes, I always compare my numbers to others’. When I find substantial discrepancies, I can dig deeper, find their cause(s), and thus understand how others view the data (and whether my methods are more sound, or I’m missing something).

Finding some forecast discrepancies (despite nearly identical poll averages) is one of many steps that gave me enough confidence to be more outspoken about the quality of mine. But I’m getting ahead of myself.

I noticed the same thing happen with some big poll average discrepancies in 2022.

Let’s look at some poll averages. Remember, neither polls nor poll averages are predictions of election outcomes.

By most estimates, there are about seven battleground states: Michigan, Wisconsin, Pennsylvania, Nevada, Arizona, Georgia, and North Carolina.

I’ve compiled poll averages from various sources: 538, RealClearPolitics (RCP), Nate Silver, RealCarlAllen (RCA, that’s a me!), as well as some “simple recent averages.” A couple of entries were left blank where I couldn’t find them or there wasn’t enough data.

First up, Pennsylvania

Wisconsin

Michigan

Arizona

Nevada

North Carolina

Georgia

I will selfishly use only my poll averages for each swing state.

A poll average is an estimated base of support, not a prediction of the result.

Here is how I recommend visualizing all poll averages, mine or anyone's:

Saying someone is “ahead,” even “right now,” is not a good way to think about it.

They might not be.

Further, those “silver” undecideds (though the bar may look small) will eventually decide.
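A back-of-the-envelope sketch of why “ahead” in base support doesn’t mean ahead in the result. The 48/47 split with 5% undecided is hypothetical, and the sketch assumes every undecided eventually picks one of the two candidates (no third parties, no non-voters) while ignoring poll error entirely.

```python
# Hypothetical base-of-support numbers for illustration: candidate A at 48%,
# candidate B at 47%, 5% undecided. Assumes every undecided eventually picks
# A or B (no third parties, no non-voters) and ignores poll error entirely.


def final_shares(a_base, b_base, a_share_of_undecided):
    """Final vote shares if A wins `a_share_of_undecided` (0 to 1) of the undecideds."""
    undecided = 100.0 - a_base - b_base
    a_final = a_base + undecided * a_share_of_undecided
    b_final = b_base + undecided * (1.0 - a_share_of_undecided)
    return a_final, b_final


def break_even_share(a_base, b_base):
    """Share of undecideds A needs to tie: solve a + U*s = b + U*(1 - s) for s."""
    undecided = 100.0 - a_base - b_base
    return (b_base - a_base + undecided) / (2 * undecided)


if __name__ == "__main__":
    for split in (0.5, 0.4, 0.3):
        a, b = final_shares(48.0, 47.0, split)
        print(f"A wins {split:.0%} of undecideds -> A {a:.1f}, B {b:.1f}")
    print(f"A needs at least {break_even_share(48.0, 47.0):.0%} of undecideds to tie")
```

In this made-up case, the candidate who looks “ahead” by a point loses whenever they win less than 40% of the undecideds.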

Poll Average Differences

As I stated, there is no objective way to take a poll average. But you may have noticed how similar all of these poll averages were.

Below, I compare each source’s discrepancy from the “10 Poll Average” across the 7 battleground states.

You can see RealClearPolitics has a pretty heavy R bias. Silver has a slight R bias.

The 20 poll average underestimates both candidates’ numbers simply because it’s still capturing higher-undecided polls from August. And we’re still talking about differences of tenths of a percentage point.

“Bias” does not mean “wrong” in this context, either. It's just a useful metric to see what data is given more weight.

This is one way I noticed Silver and RealClearPolitics poll averages being susceptible to flooding in 2022.
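The exact formula behind the comparison isn’t spelled out here, so this is only one plausible way to compute that kind of lean: the average signed difference in margin between a source and a reference average, across states. The state margins and the names “Source X” and “Source Y” are hypothetical, not the numbers from the comparison above.

```python
from statistics import mean

# Hypothetical Harris-minus-Trump margins in percentage points (not the article's data).
REFERENCE = {"PA": 0.8, "WI": 1.2, "AZ": -1.0}  # stand-in for a "10 Poll Average"
SOURCES = {
    "Source X": {"PA": 0.2, "WI": 0.5, "AZ": -1.9},
    "Source Y": {"PA": 0.7, "WI": 1.0, "AZ": -1.1},
}


def relative_lean(source, reference):
    """Mean signed margin difference (source minus reference) over shared states.

    Negative means the source leans R relative to the reference; positive leans D.
    A lean is not an error: it only shows which data the source weights more heavily.
    """
    shared = source.keys() & reference.keys()
    return mean(source[state] - reference[state] for state in shared)


if __name__ == "__main__":
    for name, margins in SOURCES.items():
        print(f"{name}: {relative_lean(margins, REFERENCE):+.2f} points vs. reference")
```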

Forecast update

As you may have noticed if you follow me, not much has changed in the past couple of weeks.

I don't promote meaningless movement, but if you squint, you'll see Harris' chances are up to 68% in my forecast, from 67%.

I will post state-by-state forecasted vote shares, along with Senate updates and the House forecast, in the next week.
