The margin of error is often cited, and poorly understood, even by experts.

Misunderstanding the literal foundation of inferential statistics should not inspire confidence that experts know what they're talking about.

This is one factor, of many, that has led me to conclude experts don't understand how polls work.

Concluding experts don't know how the thing they study works has, for me, a VERY HIGH standard of evidence. And it should. But they don't. There's simply no other explanation.

Upward-failing G. Elliott Morris published this in his recent book, calling polls “predictions.”

The fact that neither he nor his reviewers saw an issue with this simple error-of-fact is troublesome.

It does nothing more than further the falsehood that polls are a tool intended to predict the eventual result. They're not. The public and media are powerless to correct this misinformation when the alleged experts don't even understand it.

The idea that polls are predictions about some future results has consequences. Specifically, regarding how the margin of error is understood.

The margin of error IS NOT some hypothetical, theoretical, nebulous calculation. It is objective, testable, repeatable, and (in ideal circumstances) can be known with certainty.

The formula for this has been known for at least 300 years. It's not new.

If you're thinking about the fact that political polls have limitations and are never “ideal circumstances,” you're right. But you're getting wayyyyyyyyyyy ahead of yourself. More than you realize. It's the same thing experts do that causes them to not understand something as basic as the margin of error.

The foundation for the margin of error is taking random samples from a population. In those circumstances (plus some conditions that are important, but not important enough for this post) the margin of error can be known with certainty.

This “ideal” application - like figuring out the proportion of white to black marbles in an urn - must be understood before any more advanced calculations are done.
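For anyone who wants to see the urn case in action, here is a minimal sketch. It assumes the usual normal-approximation formula for a proportion, a 50/50 urn, samples of 1,000 marbles drawn with replacement, and a 95% confidence level (z = 1.96). The function names and all of those numbers are my own illustrative choices, not anything from a real poll.

```python
import math
import random

def margin_of_error(p_hat, n, z=1.96):
    """95% margin of error for a sample proportion (normal approximation)."""
    return z * math.sqrt(p_hat * (1 - p_hat) / n)

def coverage(true_share=0.5, n=1000, trials=2000, seed=0):
    """Fraction of random samples whose interval contains the true urn share."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Draw n marbles with replacement (an assumption); count the white ones.
        white = sum(rng.random() < true_share for _ in range(n))
        p_hat = white / n
        if abs(p_hat - true_share) <= margin_of_error(p_hat, n):
            hits += 1
    return hits / trials

print(round(margin_of_error(0.5, 1000), 3))  # 0.031, i.e. about +/- 3.1 points
print(coverage())  # should land close to 0.95
```

The marbles never change color and none are undecided, which is why the interval behaves the way the formula says it should.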

Here's the problem.

Marbles in an urn:

Do not change color (like voters might change their mind)

Are all either white or black. Are not undecided, like voters can be.

Analysts - alleged experts - try to treat POLITICAL poll data (which includes variable amounts of mind-changing and indecision - sometimes a little and sometimes a ton) the same as poll data like marbles from an urn.

This is a devastatingly poor understanding of statistics.

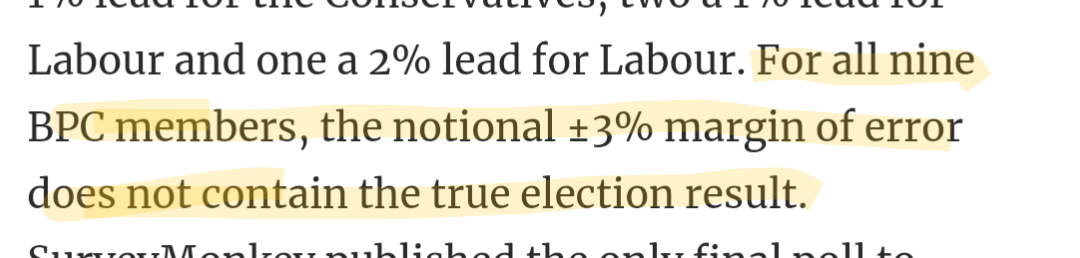

It leads to stupid ass statements like this one, from the British Polling Council, made up of more than a dozen experts, published in academic journals:

They literally don't understand what the margin of error is.

The margin of error does not apply to the eventual result.

It applies to a simultaneous census.

This term does not currently exist in stats or political science textbooks, but it will soon, because the current understanding of the margin of error in a poll is wrong.

What this means is that the data given by a poll (margin of error and all) only applies to that specific population, at the time it was asked.

If your poll observes:

45% A

45% B

10% undecided

That data says a SIMULTANEOUS CENSUS of that population (i.e. asking everyone the same question at the same time) should show, in 19/20 (95%) of Simultaneous Censuses:

45% +/- margin of error for A and B

And 10% +/- margin of error for undecided

Period. End of poll analysis. Nothing to do with what eventually happens.
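To make that concrete, here is a rough sketch of the ranges a simultaneous census should fall in for the 45/45/10 poll. The sample size of 1,000 is a hypothetical I picked for illustration (the example above doesn't specify one), and `share_interval` is just a helper name I made up. It uses the standard normal-approximation formula at 95% confidence.

```python
import math

def share_interval(share, n, z=1.96):
    """Return (low, high) for one reported share at 95% confidence."""
    moe = z * math.sqrt(share * (1 - share) / n)
    return share - moe, share + moe

poll = {"A": 0.45, "B": 0.45, "undecided": 0.10}
n = 1000  # hypothetical sample size, not from any real poll

for label, share in poll.items():
    low, high = share_interval(share, n)
    # Each line is a statement about the population at the time of the poll.
    print(f"{label}: {share:.0%} -> {low:.1%} to {high:.1%}")
```

Under these assumptions, A and B each come out at roughly 41.9% to 48.1%, and undecided at roughly 8.1% to 11.9%. Note that undecided gets its own range too. Nothing in the calculation knows or cares about Election Day.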

You might contend that this feels a little “theoretical” in political polls - it kind of is - but remember the underpinnings of the margin of error are NOT theoretical. They require the ability to take a simultaneous census of the same population!

You can't do that in political polls, at least not precisely. The election is neither a census, nor simultaneous to any poll! If that's hard to wrap your mind around, I'll explain elsewhere.

The poll's error cannot be compared apples-to-apples to the eventual result. No one who understands basic stats would do that. Different populations, can't generalize. Stats 101.

And the margin of error has no application to the eventual result.

I have spent an extensive amount of time on this terminology, and on how to teach and explain the differences between what I call “present polls” (no temporal effects) and “plan polls” (population of interest changes over time), but that's for a book.

For the record, American analysts fare no better.

The AAPOR, a collection of poll and stats experts, here in their published report say that since the “spread” or “margin” in the poll didn't match the “spread/margin” of the election, it must have been outside the margin of error.

Wrong.

It's trivial to prove, mathematically, how and why this is wrong. I can honestly do it in about 270 characters. If you read anything I write, or have a 101 level understanding of stats, you probably can too.

Here's a slightly longer explanation of why it's wrong, with a visual.

Here's a sample poll:

What this data says is that (with 95% confidence) if a simultaneous census of this population (state, district, country, doesn’t matter) were taken, the result should fall in this range.

Period.

End of poll analysis.

What these ~~idiots~~ experts have seriously asserted, badly misinforming the public and setting the advancement of the field back 100 years in the process, is that the EVENTUAL RESULT must be close to the “spread” between the two candidates, or the poll had an error.

In the 45%-45% poll, the spread is “0.”

They say that the result must be approximately 50-50, or the poll was wrong, and the error is the poll's.

No.

This incorporates an ASSUMPTION about what undecideds should do which has nothing to do with the poll’s accuracy, or the margin of error, or what the poll reported.

Even a mild 60/40 split of undecideds (thus resulting in a 51-49 result) would be asserted as a 2 point error of the poll, barely within its “margin of error.”
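Here is that arithmetic as a small sketch, so you can try other splits yourself. The function name and the extra 50/50 and 70/30 cases are mine, added for illustration. The poll numbers (45, 45, 10) never change. Only the assumption about undecideds does.

```python
def final_result(a, b, undecided, a_share_of_undecided):
    """Allocate undecideds between A and B; return final percentages."""
    a_final = a + undecided * a_share_of_undecided
    b_final = b + undecided * (1 - a_share_of_undecided)
    return a_final, b_final

# Same poll every time: 45% A, 45% B, 10% undecided.
for split in (0.5, 0.6, 0.7):
    a_final, b_final = final_result(45, 45, 10, split)
    spread = a_final - b_final
    print(f"{split:.0%} of undecideds to A: "
          f"{a_final:.0f}-{b_final:.0f}, spread {spread:+.0f}")
```

The 60/40 case gives the 51-49 result described above. The “error” the spread method reports is produced entirely by the undecided split, which the poll never claimed to know.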

The problem gets far more technical with more candidates and less parity (i.e. one candidate with a large lead), and it mangles the margin of error beyond recognition, leading to dumbass statements like “polls were outside margin of error cause it didn't get result right.”

Regardless, that 2 point discrepancy (not error) is not a poll error, and has nothing to do with the margin of error. Undecided voters represent an enormous confounding variable for this pseudoscientific “spread” calculation, one that has no place in any field that calls itself scientific.

My goal is to fix this problem. I understand my being not nice about it probably hurts my chances, but it's not as though I didn't try that route for 8 years, only to be shoved away. So I'll do it my way.

As for folks who want a more tangible understanding of how the margin of error works, here's a helpful dice example:

If you roll two dice, you can expect this range of outcomes:

Polls are not much different. A good “poll” of dice would produce:

7 +/- 4.

About 95% of rolls should produce something between a 3 and 11.

Do you see the problem with reporting a poll as simply “7”?

That's not what the poll observed.

It observed 7 +/- 4.

When I put it in terms of dice, people start to understand a little better.

That poll also isn't saying 2s and 12s are never rolled - it's only saying that they're unlikely to be rolled. In fact, given enough rolls, 2s and 12s are inevitable!

The fact that alleged experts find it noteworthy that polls sometimes produce results outside the margin of error (even properly defined) is further evidence they don't know what they're talking about.

Good polls, even ideal polls, will produce results outside the margin of error about 1/20 times!

It's no different than rolling a 2 or 12.
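If you'd rather check the dice numbers than take my word for it, this short sketch enumerates all 36 equally likely outcomes of two fair six-sided dice (the fairness is the only assumption) and adds up the exact probabilities.

```python
from fractions import Fraction
from itertools import product

# Count how many of the 36 outcomes produce each total.
counts = {}
for d1, d2 in product(range(1, 7), repeat=2):
    counts[d1 + d2] = counts.get(d1 + d2, 0) + 1

prob = {total: Fraction(c, 36) for total, c in counts.items()}

inside = sum(p for total, p in prob.items() if 3 <= total <= 11)
outside = 1 - inside  # rolling a 2 or a 12

print(inside, float(inside))  # 17/18, about 0.944
print(outside)                # 1/18
```

So “7 +/- 4” covers about 94% of rolls, and a 2 or 12 shows up about 1 time in 18. That's close to the 1-in-20 miss rate a 95% margin of error builds in by design.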

Here is Morris, in the same chapter of his book, being wrong about polls again:

OMAGAHD THIS POLL WAS BAD LITERARY DIGEST

Brilliant analysis. I'm guessing he's similarly baffled when he rolls a 2 or 12 in Monopoly.

So f*ckin what? Polls sometimes produce results outside the margin of error. Someone who literally makes a living in this field should know better.

This field needs to do better, and thankfully for me, it's not hard to do so.

The only question is whether enough people - whether in the public or academia - care to help me make it better.