Nate Silver's weird stand

This isn't good work

I'll spare you the punditry angle; this will be a fairly short post.

Overall, I think well of Silver's work and his contribution to the industry, even though it has also contributed to some fundamental misunderstandings about how polls work.

He's not dumb.

But he's increasingly staking his reputation on a historically volatile election.

His rise to fame - which is mostly justified tbh - came on the back of some decent analysis, fortuitous timing given the weaknesses of his model (elections with few undecided or third-party voters), and a clear-eyed perspective (Clinton wasn't a huge favorite).

But as far as I know, he never staked out any of his model's positions as the Indisputably Correct one.

First of all, it's July. Election forecasts in July don't have much data to work with.

In election season, 3 months is a long time. Don't believe me?

The first seven jurors in Trump's hush money trial were selected three months ago. Not the trial starting, not the verdict…the first jurors were selected.

Now, when it comes to forecasting, time is a hell of a drug. It can *feel* like “now is forever” when you're looking at poll numbers, but the only thing we know is that we don't know.

Here is Trump's internal polling from 2020, when his reelection appeared to be a lost cause. It ended up close.

In 2024, that “now is not forever” mentality seems truer than ever. Controversy around the Democratic nominee and (space reserved for an even bigger scandal or news story than anything before it) mean things will change after July.

For that reason, I have been VERY open about the difficulty of forecasting this election. I think Biden is a favorite (and I promise my forecast will be out soon).

But all forecasts have what I think of as “uncertainty cones.”

Too many things could, might, and will happen for me to confidently give a narrow, specific win probability right now.

Here is a visual of what I mean by “uncertainty cones”:

If someone asserted that the race were a true tossup - 50/50 either way - the way you interpret that probability probably isn't filtered through this lens.

A very good forecast in a high-data event will have an uncertainty cone like this.

If I say something is 50% to happen, and it is actually 45% or 55%, that's not a big deal. Good forecast.

It's only even remotely, theoretically possible to be this confident very close to the election. The week before MAYBE, more likely the day before, or the day of.

Anyone who asserts a greater level of confidence than this (basically that their forecast is almost certainly within +/- 5% of the true win probability) is lying to both you and themselves.

And that's very close to the election!

In reality, I think this number (for very good political forecasts) is probably closer to 10%. It's hard, and requires a lot of assuming/estimating.
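For the curious, here's a toy illustration of why the cone is so wide this early. To be clear, this is not my model (or Silver's, or 538's); it's a minimal sketch that assumes the final margin is normally distributed around an expected lead, with a made-up spread (`error_sd`). The helper names `win_probability` and `uncertainty_cone` are hypothetical. Feed it ranges of leads and errors that all seem defensible in July and see how far apart the resulting win probabilities land.

```python
from statistics import NormalDist


def win_probability(lead: float, error_sd: float) -> float:
    """Chance the final margin ends up above zero, ASSUMING it is normally
    distributed around `lead` (in points) with standard deviation `error_sd`."""
    return 1 - NormalDist(mu=lead, sigma=error_sd).cdf(0)


def uncertainty_cone(leads: list[float], error_sds: list[float]) -> tuple[float, float]:
    """Lowest and highest win probability across every combination of
    assumed lead and assumed error. All inputs are assumptions."""
    probs = [win_probability(lead, sd) for lead in leads for sd in error_sds]
    return min(probs), max(probs)


# Hypothetical July inputs: the expected lead is somewhere between -2 and +6
# points, and the error on the final margin is somewhere between 3 and 6 points.
low, high = uncertainty_cone(leads=[-2, 0, 2, 4, 6], error_sds=[3, 4, 5, 6])
print(f"{low:.0%} to {high:.0%}")
```

With these made-up ranges it prints 25% to 98%. The specific numbers don't mean anything; the width does. Small, reasonable changes to what you assume in July move the answer a lot.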

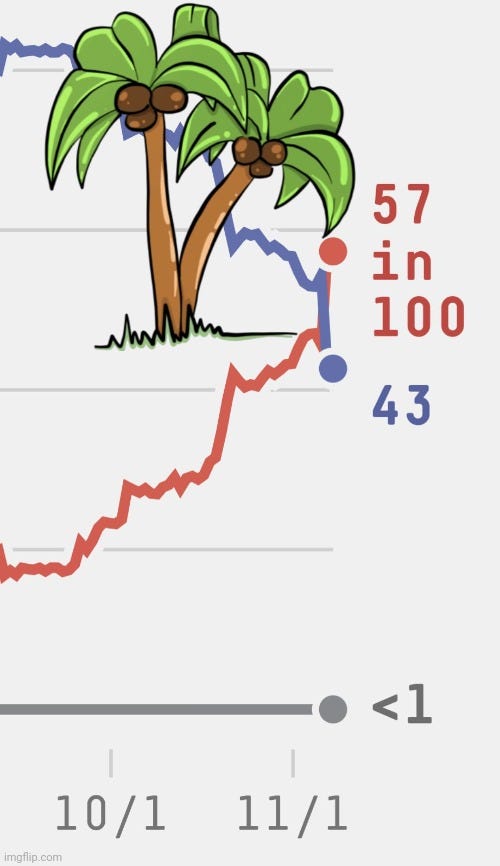

It's a very bad look for Nate's model when it does this literally overnight in the 2022 Pennsylvania Senate forecast (coconut tree reference intentional).

But he wants to simultaneously claim his forecast in JULY can't be miscalibrated?

He thought Fetterman was an 80% favorite in October, but only 43% a month later.

Months (plural) from the election, no one can be particularly confident in their forecast.

Not if they're being honest.

Here's what Silver has updated to recently:

He went from giving Biden a 1-in-3 chance to win to approaching 1-in-4 recently.

At 538, meanwhile, Biden is hovering around 1-in-2.

On paper, these forecasts seem irreconcilable. With a proper understanding, they're not irreconcilable at all. Nor does that mean the “true answer” must be somewhere in between.

If Biden’s “true win probability” were 90%, or 10%, neither model could honestly rule that out right now.

So if my forecast says Biden is about 67% to win (higher than both Silver's and 538's), doesn't that mean I think Silver's forecast *must* be terrible?

No.

It's too early.

27% or 97% are well within my July cone of uncertainty.

We won't get good data until September, and even then, there will be a lot of uncertainty.

Now, a forecast that moves from 57% to 43% overnight, the day before an election, as his did, absent some major event (there wasn't one), is a sign that the forecast is not well calibrated.

In that election, I rated Fetterman as a 65% favorite. I was pretty confident in the direction of my call, but 57%, even very close to the election, wasn't different enough from my number for me to care.

But when they moved it to 43%, I had some things to say (and hypothetical bets to make, thanks Nate!)
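To put that overnight move in perspective, the same kind of toy model can be run in reverse: if you assume the final margin has a normal error of fixed size, each win probability implies an expected margin. The 3-point standard deviation here is purely my assumption for illustration (not a number from Silver's model), and `implied_lead` is a hypothetical helper.

```python
from statistics import NormalDist


def implied_lead(win_prob: float, error_sd: float) -> float:
    """Expected margin (in points) implied by a win probability, ASSUMING the
    final margin is normal with standard deviation `error_sd`.
    Inverts win_prob = Phi(lead / error_sd)."""
    return error_sd * NormalDist().inv_cdf(win_prob)


# Assumption: a 3-point standard deviation on the final margin the day
# before the election, held fixed across both readings.
ERROR_SD = 3.0

before = implied_lead(0.57, ERROR_SD)
after = implied_lead(0.43, ERROR_SD)
print(f"implied lead: {before:+.2f} -> {after:+.2f} points "
      f"(a shift of {before - after:.2f})")
```

Holding the error fixed puts the entire 57% to 43% swing on the expected margin, which under these assumptions flips from about +0.5 to about -0.5 points, a shift of roughly one point overnight. A real model could also change its error estimate, which this sketch ignores. Whether a one-point swing in the expected margin the day before an election, with no major news, is believable is exactly the calibration question.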

Regardless, here's the problem:

By staking out a position that is not based in data, Silver is introducing unnecessary (and unreliable) risk to his forecasts.

Here, he's criticizing the (new) FiveThirtyEight model for overweighting certain fundamentals.

What? You think we should weight polls extremely heavily in JULY?

When many voters don't care about politics yet, and lots more are undecided?

Oh wait. Apparently…yes.

I think Nate would benefit from some simple lessons in forecasting. Namely:

But here's the problem.

There are, by my estimation, only a few possibilities. I'll exclude my forecast for simplicity and because it's not out yet:

1. Silver's forecast is better, nothing much changes, and it remains relatively consistent up to election day (with Trump as the favorite). This “consistent forecast” is highly unlikely, given its historical volatility.

2. FiveThirtyEight’s forecast is better, things change in Biden's direction, and Silver has to either stake out an untenable position or admit his forecast was bad…or claim his forecast wasn't bad but whatever happened was very unlikely or unforeseeable.

3. Silver's forecast is better, things get worse for Dems, and FiveThirtyEight's forecast converges on his.

4. FiveThirtyEight’s forecast is better, things get worse for Dems, and Nate has to choose whether to let their forecast converge on his, or push his own to an even more aggressively low probability for Biden.

To me, there's no shame in 2 or 3. There's more in 1 and 4.

I have Biden as a favorite now, and maybe by election day he won't be. Maybe my forecast is bad, or maybe it's good but things will change between now and then - it's fine; there's no shame in uncertainty.

But Silver is staking out a position that is basically precluding the possibility that things could get better for Biden, months before the election.

Given that both the old guy and the new guy at 538 have had extremely volatile forecasts (Dems are slight underdogs to keep the Senate…no they're huge favorites! Okay they're medium favorites…no they're slight underdogs again), I wouldn't be comfortable staking out any position this early, with so much still uncertain.

Have you looked at 538’s model?

Nate’s (and many others’) issue with 538 isn’t that it might be wrong.

Their issue is that it’s clearly broken. Or at the very least, in the early stages of beta testing with lots of bugs that haven’t been worked out.

Its predictions make no sense, and some of them are mathematically impossible.

And its assumptions make little sense: it weights historical state-level polling heavily without accounting for how the demographics of states change over time.

Not to mention the fact that state-level polling tends to be sparse, especially historically and especially in non-swing states.

538 might end up being right...but that doesn’t mean it’s good.

Would love to see your forecast when it comes out, specifically how you define the "fundamentals". In my admittedly amateur experience, there's no agreed-upon set of fundamentals, nor an agreed-upon sliding scale for weighting them. And I totally agree with your view on polls. I have spent countless hours telling people that polls in July are about as accurate as an Imperial Stormtrooper in Star Wars.