Nate Silver seems like that guy who shows up to the potluck empty-handed and criticizes everyone else's dishes.

I'll keep this article short, sweet, and to the point.

Silver believes pollsters should act in his best interest, even when that goes against their own.

This is not a new pattern of behavior, nor is it unique to herding.

Here, he criticizes Patrick Murray of Monmouth University Polling for not reporting poll data in a way Nate would prefer.

Note - the way Nate wants the numbers is the proper way.

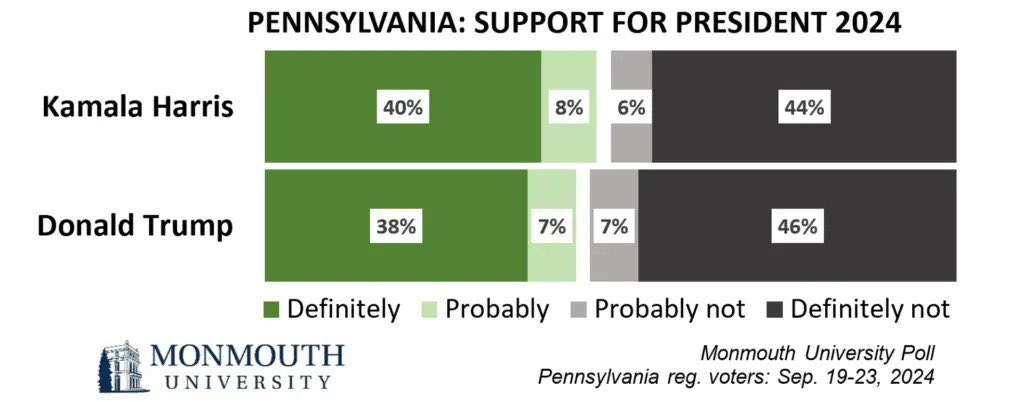

Here is the data Nate was referring to, by the way.

That's excellent data for a good analyst, for what it's worth. It just doesn't fit neatly into his averages, so he doesn't like it.

Speaking of averages

Yeah, herding is a real problem

Concern about what herding does to the reliability of poll data (and poll averages) isn't new.

Nate wrote about it at FiveThirtyEight a decade ago. Good research.

I wrote about it in my book to help explain the competing interests that individual pollsters have, compared to poll aggregators.

Nate wrote about it again recently.

So, if it's a real problem…then why should he shut the f**k up about it?

Because

It's

His

Fault

It's his fault! He popularized the already unscientific (and provably false) method of measuring poll accuracy by how well it “predicts the margin” of the election result.

And then, in a proud demonstration of his statistical ignorance, he gives these pollsters a grade by how well they perform (by this unscientific metric) based on individual elections.

Yes, he (along with the consensus of the field's analysts) literally thinks that polls whose margins are closer to the election margin are more accurate, and that his average is an objective measure of what The Polls say.

Maybe you've put together why herding is his fault, maybe not. I promised to stay brief.

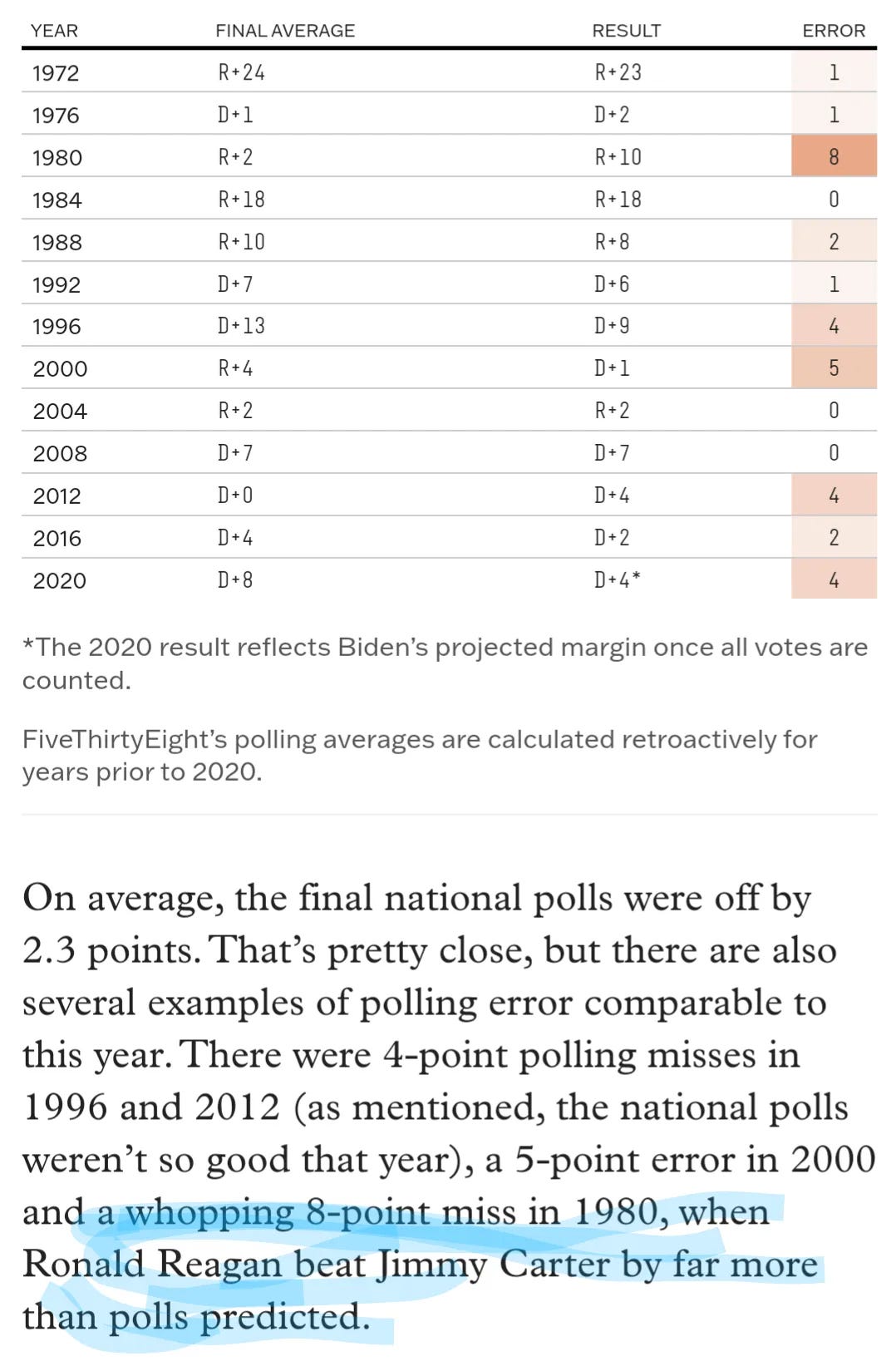

Here are two charts from the article he published in 2014.

The first one basically shows why taking a poll average is preferable to looking at individual polls, and why independent data will make a poll average more accurate, compared to “herded” (not independent) data.

But!

The second shows that herding makes individual pollsters appear more accurate (again, by his preferred metric) at the expense of an accurate poll average!

If your reported data is not herded, it naturally means that you'll occasionally report an outlier, or something close to it.

For an average, this is useful, because outliers should - in the long run - cancel each other out (but may allow you to see, in a close election, who has a slight edge).
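A quick simulation makes that trade-off concrete. This is only a sketch under made-up assumptions, not anything taken from Nate's article: the true margin, the size of each poll's random error, the number of polls, and the `HERD_WEIGHT` pull toward the running average are all hypothetical. Error is measured the way the ratings measure it, as distance from the final result, which is the very metric this article disputes.

```python
import random
import statistics

TRUE_MARGIN = 2.0   # hypothetical true election margin, in points
POLL_SD = 3.0       # assumed random error of one honestly reported poll
N_POLLS = 10        # assumed number of polls published before the election
HERD_WEIGHT = 0.7   # assumed pull toward the running average (0 = no herding)


def one_election(herd_weight, rng):
    """Simulate one election; return (mean individual error, error of the average)."""
    published = []
    for _ in range(N_POLLS):
        result = rng.gauss(TRUE_MARGIN, POLL_SD)  # what the poll actually found
        if published:
            # A herding pollster shades its number toward what is already out there.
            anchor = statistics.fmean(published)
            result = herd_weight * anchor + (1 - herd_weight) * result
        published.append(result)
    individual = statistics.fmean([abs(p - TRUE_MARGIN) for p in published])
    average = abs(statistics.fmean(published) - TRUE_MARGIN)
    return individual, average


def compare(herd_weight, trials=5000, seed=0):
    """Average both error measures over many simulated elections."""
    rng = random.Random(seed)
    runs = [one_election(herd_weight, rng) for _ in range(trials)]
    return (statistics.fmean([r[0] for r in runs]),
            statistics.fmean([r[1] for r in runs]))


if __name__ == "__main__":
    for label, weight in [("independent", 0.0), ("herded", HERD_WEIGHT)]:
        individual, average = compare(weight)
        print(f"{label:>11}: typical poll off by {individual:.2f}, "
              f"average off by {average:.2f}")
```

Under these assumptions the herded polls each land closer to the result, while the average built from them lands further away, because it ends up leaning heavily on whichever polls happened to be published first.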

But if your reputation is being largely formed on a tiny sample of one election, for the one or two polls you conduct closest to the election, and your poll produces results three or four points off the average, what exactly is your incentive for reporting that data as such?

Nate says: but it's better for my average.

And the pollster says: but this is better for my company.

They're both acting selfishly, but as I've previously stated on the topic of unaccountable quants:

The pressure only goes one way. The pollsters are criticized for their methods, but the aggregators and analysts are never criticized for theirs. Despite, you know, pollsters (mostly) doing legitimate work, and the analysts doing indefensibly poor work.

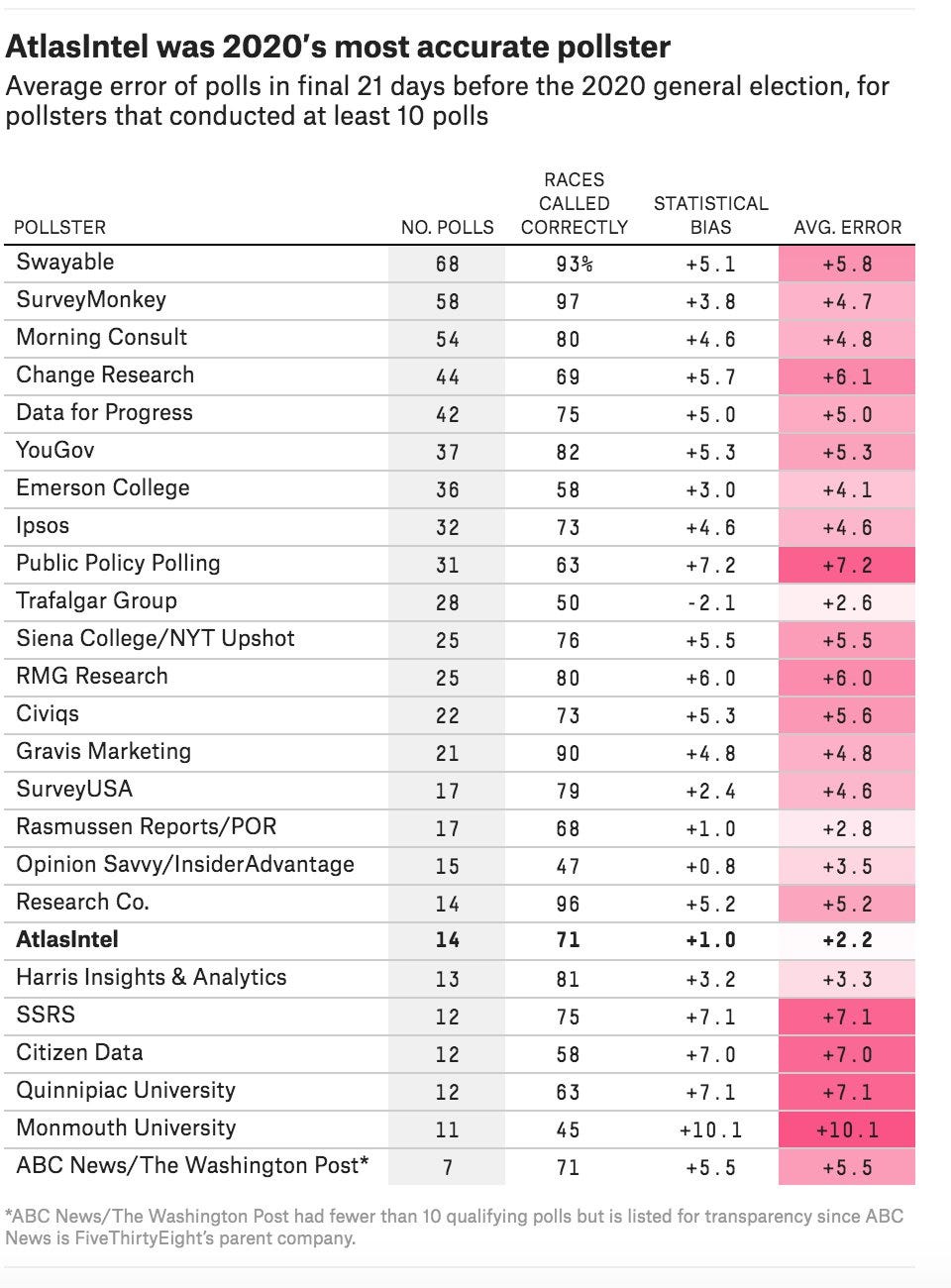

When you share charts like this after every election, you're telling pollsters how they'll be rated and judged.

It's a badge of honor for the ones who are highly rated. They aggressively promote these junk standards on their websites. Why wouldn't they?

Whether they achieved this by skill or luck (or a blend) doesn't really matter. This metric said they were accurate.

I summarize this problem in my book:

One large group of people, pollsters, use a tool to collect and report data. Then another group of people, analysts, have created an unscientific set of standards by which they judge poll accuracy. These analysts have substantial media and public influence, and as such, each pollster will be judged according to these unscientific standards - and their reputation impacted on that basis.

The pollsters know the criteria on which their data will be reported and judged, thus creating the dilemma: report the data as it is, or in a way that protects your reputation?

This dilemma can be solved.

It requires:

1) Establishing proper scientific standards by which poll accuracy can be judged, and (in the same “statistical literacy” line of thinking)

2) Understanding that, no, one or two elections is not a sample size large enough to determine who the best pollsters are.

But for as long as Nate wants to promote his internally invalid definition of poll accuracy, he has no grounds to get mad at pollsters for playing the game by his dumb rules.

(If there's only one link you click in this article, I'd strongly recommend the “unaccountable quants” one - it links to something I wrote several months ago but perfectly outlines how much luck goes into these “accuracy” grades.)
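The sample-size point can be sketched the same way. The setup below is hypothetical: twenty pollsters, one of them assumed to be genuinely better than the rest (`GOOD_SD` versus `TYPICAL_SD`), each graded on its average miss over some number of elections. It is an illustration of how much luck a short track record carries, not a reconstruction of anyone's actual rating system.

```python
import random
import statistics

N_POLLSTERS = 20  # assumed size of the field
GOOD_SD = 2.0     # assumed error of the one genuinely better pollster
TYPICAL_SD = 3.0  # assumed error of the other nineteen


def best_pollster_wins(n_elections, rng):
    """True if the genuinely better pollster ends up with the lowest average miss."""
    sds = [GOOD_SD] + [TYPICAL_SD] * (N_POLLSTERS - 1)
    avg_miss = [
        statistics.fmean([abs(rng.gauss(0.0, sd)) for _ in range(n_elections)])
        for sd in sds
    ]
    return avg_miss.index(min(avg_miss)) == 0


def top_grade_rate(n_elections, trials=1000, seed=0):
    """Share of simulated track records in which the better pollster ranks first."""
    rng = random.Random(seed)
    wins = sum(best_pollster_wins(n_elections, rng) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    for n in (1, 2, 10, 50):
        print(f"{n:>2} election(s): better pollster ranked first "
              f"{top_grade_rate(n):.0%} of the time")
```

With these assumed numbers, the better pollster rarely tops the chart after a single election and does so far more reliably after fifty, which is the gap between a grade and a measurement.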

Update because Nate's still talking.

Here is literally what Nate's doing. Literally.

1) Judging pollster accuracy by how well their poll margin predicts election margin (which is an unscientific metric)

2) Crying about the methods pollsters are using to try and get their poll margin to better predict the election margin

It's him. Hi. He's the problem, it's him.

(He's the biggest part of the problem, anyways)

And in case you were wondering if my book is worth buying: yes, I called this out, too.

While I'm trying to solve the problem, Nate is just going to keep crying about it, either ignorant or dismissive of the fact that his own standards are the whole reason pollsters would herd in the first place.

I am shocked (but totally unsurprised) at the Iowa poll. Women - all colors, all political parties and all ages - have been engaged & enraged post-Dobbs. Oh, and lots of folks haven’t forgotten January 6th.

Yet the pollsters couldn’t seem to figure out that perhaps more women would turn out. Or perhaps there’d be a ton of Republican crossover vote for Harris.

I had to read a TON of articles about the “bro vote” and the importance of Joe Rogan. Yet even I know the “young bro” demo is the least likely to vote.

But women? Esp women born in the 1950s and 60s like me? WE VOTE. And we don’t vote for corrupt traitors, rapists or fraudsters. Esp when they’re the same person.

Thank you for this. I have been trying to put my finger on what feels so wrong about the poll aggregators, especially after reading Nate’s article on herding today. I don’t have the stats background to fully delve into the issues, but this analysis captures the conclusion that’s been rumbling around in my head: it’s unscientific but with a veneer of science to try to pass as such. The same as the polls themselves.